AI Isn’t Good Enough

On a recent cross-country trip, I (Paul) drove from California to Illinois and back again. Along the way, I saw a sign I had never seen before, in a McDonald’s window: $1,000 SIGNING BONUS. That was not a thing when I was a teenager working minimum-wage jobs. No one paid $1,000 signing bonuses to work at fast-food restaurants.

These bonuses have been around for a few years now, having started during Covid when the U.S. workforce fell into a wormhole and disappeared. But instead of going away, they have persisted, and they seem to have gotten even larger.

This got us thinking, unsurprisingly. It ties into a theme we have been rolling around, one we’re passionate about. Explaining what is going on, why it is important, and its relevance for investing will require some groundwork, so bear with us for a few paragraphs.

There is a persistent structural imbalance in the U.S. workforce: for the most part, there are too few people for all the jobs. The imbalance has resisted efforts to reduce it because it is driven by a host of factors, including demand growth, an aging society, retirements, lower immigration, and skill mismatches, which together have created an unprecedented shortage of workers.

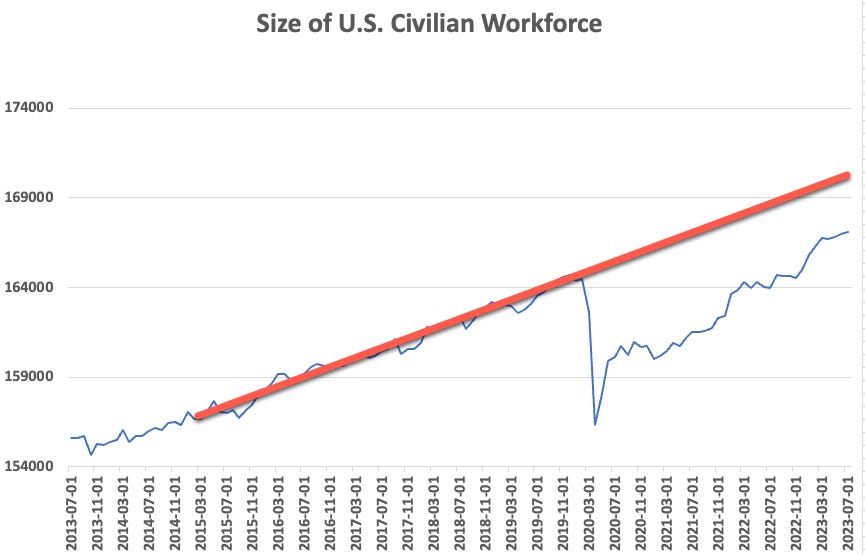

You can see some of this in the U.S. labor force participation rate. It fell sharply during Covid and hasn’t completely recovered since. Participation remains a full percentage point below pre-Covid levels, which, in a labor force the size of America’s, is a stark gap.

You can also see this in the size of the labor force. While the U.S. civilian labor force is larger than it was pre-Covid, so is the U.S. economy. If the labor force had kept growing roughly in line with its historical trend and with GDP, we’d have almost 5 million more workers out there. But we don’t. The gap is shrinking (it was closer to 7 million a year ago), but it is still very large, and, given retirements, skill mismatches, and aging, it seems unlikely we will close it quickly, if ever.

It is important to be nuanced about this. The shortages are not in all areas. Arguably, we still have too many people in technology company middle management. For example, all eight of the Google-based authors of the seminal “transformers” paper that helped spark the current wave of large language models have now left Google to start various AI companies. As a friend who worked at Google long ago reminded us, that is more people than there were senior vice presidents at Google in 2004. Technology is not, with a few exceptions, where jobs are going unfilled.

But almost everywhere else needs people. Badly. Across retail, restaurants, manufacturing, trades, and on and on, companies are struggling to hire. And this brings us back to the cross-country trip observation and that “signing bonus” sign in a McDonald’s window.

The shortage of human workers has become a limiting factor on economic growth in the U.S. Absent a wave of immigration, which creates its own problems, politically and otherwise, and doesn’t necessarily fill the observed skill gaps, something needs to change. Historically, when labor has become expensive relative to capital, economies have responded with automation, so we should expect the same today.

So, as promised, let’s circle back from demography and economics to technology, investments, and automation. As we’ve written previously, what’s important about this wave of automation is that it is skewed toward jobs that require “tacit knowledge,” where we know what to do but can’t always reduce it to programmatic steps. These jobs are not assembly lines, so simply throwing capital (orthodox automation) at the problem doesn’t work.

That is why, in a sense, the current wave of AI has come along at the perfect time. It is the first automation technology that applies to tacit knowledge, to tasks where we can’t describe, step by step, how inputs turn into outputs. Seen that way, we should embrace rather than fear the current wave of technological change, because it can help with the observed U.S. worker shortage, and do so in a replicable and continuous way, much like traditional automation.

The trouble is, not to put too fine a point on it, that current-generation AI is mostly crap. Sure, it is terrific at using its statistical models to produce passages of text that read better than the average human’s writing, but that’s not a particularly high hurdle. Most humans are terrible writers and have no interest in getting better. Similarly, current LLM-based AI is very good at checking input text against rules-based models, undermining the livelihood of Cascading Style Sheets (CSS) pedants who mostly shouted at people (fine, at Paul) on StackExchange and Reddit. Now you can just ask an LLM to write that code for you, or to check crap code you’ve written yourself.

But that’s not enough. We are quickly reaching the limits of current AI, whether because of its tendency to hallucinate, inadequate training data in narrow fields, training corpora frozen years ago, or myriad other reasons. Pushing against those limits has unintended consequences, which we will return to in a moment. Most observers haven’t realized this yet, but they will.

Our view is that we are at the tail end of the first wave of large language model-based AI. That wave started in 2017 with the release of the transformers paper (“Attention Is All You Need”) by those Google researchers, and it ends sometime in the next year or two as people run up against its limits. It ends partly because of constraints inherent in the current approach, but also because of technological and cost limits on training models, whether wide or narrow. This wave has been terrific for a few companies, especially Nvidia, but it will likely be remembered as little more than plumbing the AI house. Its defining characteristic is scarcity (of training data, models, chips, and AI engineers) and the high costs that scarcity brings.

Before explaining which waves come next and what they will mean, we will digress into why it is so important that we rethink how we think about automation. There is a huge risk that we won’t, and that this opportunity will go unrealized. That takes nothing away from legitimate concerns, like existential risk, but we have a near-term problem: the workforce wormhole is eating the economy.

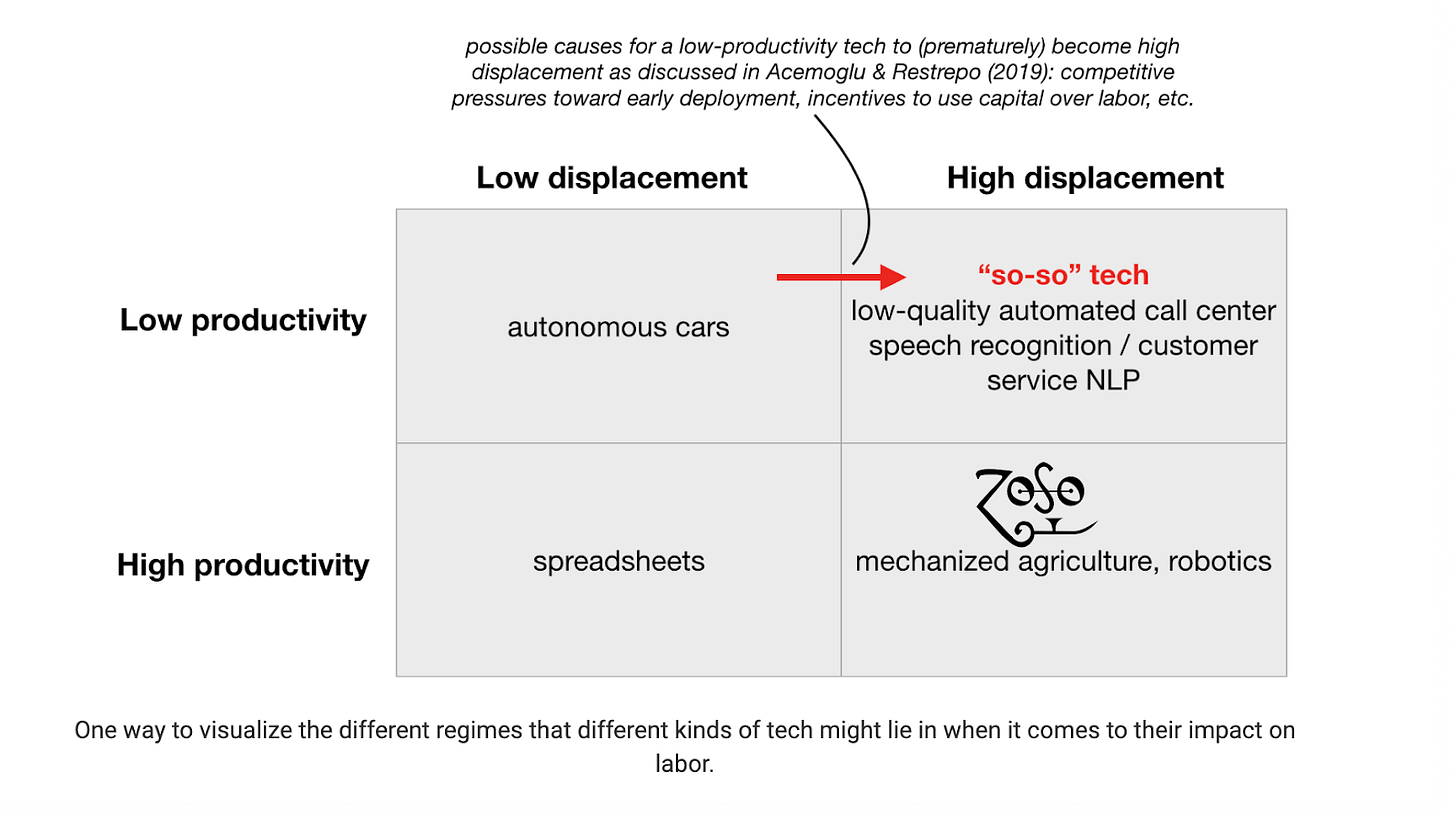

In a 2019 paper (“Automation and New Tasks: How Technology Displaces and Reinstates Labor”), Acemoglu and Restrepo develop and test important ideas about the nature of automation. While their models are highly quantitative, they can be summarized in simpler terms. In essence, the authors show that for automation to have widespread benefits, it must deliver productivity gains large enough to more than compensate for the workers it displaces.

In the authors’ own words: “...automation always reduces the labor share in value-added and may reduce labor demand even as it raises productivity.” Of course, there are opposing forces, as they note: “The effects of automation are counterbalanced by the creation of new tasks in which labor has a comparative advantage.” In other words (and this is a trope technology boosters often deliver nuance-free), automation’s displacement effects can be outweighed if new tasks are created where labor has an advantage over machines.

This is a huge “if.” Not all waves of automation create jobs as quickly as they displace them. And, even more importantly for our purposes, not all waves of automation deliver bursts of productivity that compensate for the displacement. Acemoglu and Restrepo coined the phrase “so-so automation” for a particularly insidious and increasingly common form of tech-enabled automation, where there is high worker displacement without commensurate productivity gains, and so little human flourishing is created.

Has this happened? All the time, Acemoglu and Restrepo show. And, worryingly, it has happened more frequently in recent decades, while we have largely failed to notice so-so automation’s drag on productivity growth:

Our empirical decomposition suggests that the slower growth of employment over the last three decades is accounted for by an acceleration in the displacement effect, especially in manufacturing, a weaker reinstatement effect, and slower growth of productivity than in previous decades.

You can see their idea in schematic terms in the following 2x2 grid. So-so automation is in the top right; the kind of automation we want, which we think of as flourishing, is in the bottom right. We should never confuse the two, given that high worker displacement must be more than offset by high productivity gains if we are to avoid massive economic disruption, especially in an economy where workers are scarce and inflexible, as we have already discussed. The lower-right box should be our goal. By way of contrast with so-so, call it “Zoso” automation (yes, that is a Led Zeppelin pun; and yes, Led Zeppelin’s best song is still “Kashmir”): we get worker displacement, but we also get even more explosive productivity growth.

How do we know whether something is so-so or Zoso automation? One way is to ask a few test questions:

1. Does it just shift costs to consumers?

2. Are the productivity gains small compared to worker displacement?

3. Does it cause weird and unintended side effects?

Here are some examples, in no particular order. AI-related automation of contract law is making it cheaper to produce contracts, sometimes with designed-in gotchas, which leads to even more litigation. Automation of software development is mostly producing more crappy, unmaintainable software rather than rethinking and democratizing software production itself. Automation of call centers is coming fast, but it is like self-checkout in grocery stores: customers are implicitly forced to serve themselves by working out the right question to ask.

This is a problem. These are all examples of so-so automation. They are happening everywhere, and they typify much of what investors are funding and entrepreneurs are building. These products and services will, inevitably, displace a huge number of people, but they will not drive human flourishing.

So, what does this mean? It means we need much better AI. Or we need much worse AI. The second point first: much worse AI would displace few workers, making it less economically fraught, and the U.S. economy can work within those limits. We are in a middle zone, however, with AI able to displace huge numbers of workers quickly but not to provide the broader productivity benefits that would compensate.

This brings us back to our wave theory of what is happening in AI. As we wrote above, and contrary to the hype, we think we are already at the tail end of the current wave of AI. We are bumping against many of its limits, which in turn constrains the state space of AI applications that drive explosive productivity growth rather than so-so automation.

What comes next? In our view, the next wave will last until perhaps 2030, bringing a diversity of new models and techniques (like tree of thoughts), cheap and ubiquitous GPUs, and the inevitable commoditization of LLMs, with an accompanying explosion of open-source models. At the same time, today’s massive, intermittently updated models will come to seem quaint, like mainframe computers. The next generation will be lighter weight and much more specific. A good example is Retrieval-Augmented Generation (RAG), where the old LLM “mainframe” becomes mostly a syntax and grammar generator. All the action is local and informed by proprietary data, whether real-time, historical, or both.
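To make that division of labor concrete, here is a toy sketch of the RAG pattern in Python. It is our illustration only, not any particular product’s design: the documents and the names `DOCUMENTS`, `retrieve`, and `build_prompt` are hypothetical, the keyword-overlap scoring is a crude stand-in for the embedding search real systems typically use, and the actual LLM call is omitted. The point is that the local, proprietary data supplies the facts, and the model would only turn them into fluent prose.

```python
import re
from collections import Counter

# Hypothetical in-house documents standing in for "proprietary data".
DOCUMENTS = {
    "returns-policy": "Customers may return unopened items within 30 days for a full refund.",
    "shipping": "Standard shipping takes five business days; express shipping takes two.",
    "warranty": "Electronics carry a one-year limited warranty covering manufacturing defects.",
}


def tokenize(text: str) -> Counter:
    """Lowercase bag of words; a crude stand-in for embedding vectors."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], k: int = 1) -> list[str]:
    """Return the ids of the k documents sharing the most words with the query."""
    q = tokenize(query)
    # Counter & Counter keeps the minimum count of each shared word.
    scores = {doc_id: sum((q & tokenize(text)).values()) for doc_id, text in docs.items()}
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 1) -> str:
    """Assemble what the (omitted) LLM would receive: retrieved facts plus the question."""
    context = "\n".join(docs[doc_id] for doc_id in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

For example, `build_prompt("How long does express shipping take?", DOCUMENTS)` pulls in only the shipping document, so the model’s answer would rest on local data rather than on whatever its training corpus happened to contain.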

To get from here to there, a bunch of things have to change. For starters, we need far better tools. For example, our current generation of developer tools is perfectly adapted to a world that no longer exists, and those tools are mostly being kludged with new features that make them more narrowly useful rather than being redesigned altogether. Without a change in tooling, we cannot see past recency bias to the kinds of services that might drive explosive productivity growth. We will be trapped in that box of so-so automation.

We also need to stop thinking in such siloed terms about technology. It is possible that, with the right tools, the most explosive growth in flourishing-promoting automation will be in areas other than those where the last few waves played out, that is, outside white-collar office work. For example, there are early signs that, with minor advances, huge gains are possible in the life sciences. We are already seeing new drugs and targets, new ways of analyzing cell types and gene expression, and so on. We must not let our blinkers close us off to the idea that true Zoso automation will come mostly in unexpected areas, like biology.

At an even higher level, however, we need to break free from decades of thinking about what automation is for. As Acemoglu and Restrepo show in their 2019 paper, the productivity benefits of recent technology-related automation have been declining, with more and more of it directed at low-productivity displacement.

Decades ago, business theorist Michael Hammer made bank promoting the idea of using technology to reengineer how companies work. It didn’t happen, partly because there was no way to do it with then-current technology, but mostly because reengineering became consultant code for cost-cutting and layoffs.

This time can be different. By understanding where we are in the waves of AI, and by recognizing the limits of current LLMs, we can see how those limits already dictate what, how, and when we automate. Whether we look past them will determine whether this next wave leads to explosive improvements or just more so-so automation. We need to look past the limits of current AI technology if we are to break free from the past few decades of so-so automation and counteract the gravitational forces dragging the U.S. workforce into that wormhole.